Biometric mass surveillance involves the indiscriminate and/or arbitrary monitoring, tracking, and processing of biometric data related to individuals and/or groups. Biometric data encompasses, but is not limited to, fingerprints, palmprints, palm veins, hand geometry, facial recognition, DNA, iris recognition, typing rhythm, walking style, and voice recognition.

Though often targeted at specific groups, mass surveillance technologies are becoming prevalent in publicly accessible spaces across Europe. As a result, football fans are increasingly affected by them.

Apart from its undemocratic nature, there are many reasons why biometric mass surveillance is problematic for human rights and fans’ rights.

Firstly, in the general sense, the practices around biometric mass surveillance in and around stadia involve the collection of personal data, which may be shared with third parties and/or stored insecurely. All of this biometric data can be used in the service of mass surveillance.

Secondly, fans’ culture is under threat because mass surveillance can be deployed to control or deter many of the core elements that bring people together in groups and in stadia. In particular, biometric mass surveillance can create a ‘chilling effect’ on individuals: knowing one is being surveilled can discourage people from legitimately attending pre-match gatherings and fan marches, or joining a protest.

Moreover, women, people of colour, and fans who belong to the LGBT+ community may be at higher risk of being targeted or profiled.

Football Supporters Europe (FSE) highlighted these problems earlier in the year:

“There are two good reasons why fans should pay close attention to the question of biometric mass surveillance. First, we have a right to privacy, association, and expression, just like everybody else. And second, we’re often used as test subjects for invasive technologies and practices. With this in mind, we encourage fans to work at the local, national, and European levels to make sure that everybody’s fundamental rights are protected from such abuses.”

Football fans and mass surveillance

The situation differs from country to country, but there are countless examples of fans being subjected to intrusive or, in some cases, unauthorised surveillance:

- Belgium: In 2018, second-tier club RWD Molenbeek announced plans to deploy facial recognition technology to improve queuing times at turnstiles.

- Denmark: Facial recognition technology is used for ticketing verification at the Brøndby Stadion. The supplier claims that the Panasonic FacePro system can recognise people even if they wear sunglasses.

- France: FC Metz allegedly used an experimental system to identify people who were subject to civil stadium bans, detect abandoned objects, and enhance counter-terror measures. Following several reports, the French data protection watchdog (CNIL) carried out an investigation which determined that the system relied on the processing of biometric data. In February 2021, CNIL ruled the use of facial recognition technology in the stadium to be unlawful.

- Hungary: In 2015, the Hungarian Civil Liberties Union (HCLU) filed a complaint at the Constitutional Court challenging the use of palm vein scanners at some football stadia after fans of several clubs objected to the practice.

- The Netherlands: In 2019, AFC Ajax and FC Den Bosch outlined plans to use facial recognition technology to validate and verify e-tickets.

- Spain: Atlético Madrid declared their intention to use facial recognition systems and implement cashless payments from the 2022-23 season onwards. Valencia, meanwhile, have already deployed facial recognition technology designed by FacePhi to monitor and control access to their stadium. Several clubs, including Sevilla FC, also use fingerprint scanning to identify season ticket holders at turnstiles.

- United Kingdom: In 2016, football fans and other community groups successfully campaigned against the introduction of facial recognition technology at Scottish football stadia. Soon after, South Wales Police began using facial recognition systems at football games to “prevent disorder”. According to the BBC, the use of the technology at the 2017 Champions League final in Cardiff led to 2,000 people being “wrongly identified as possible criminals”. In 2019 and 2020, Cardiff City and Swansea City fans joined forces to oppose its use, considering it “completely unnecessary and disproportionate”.

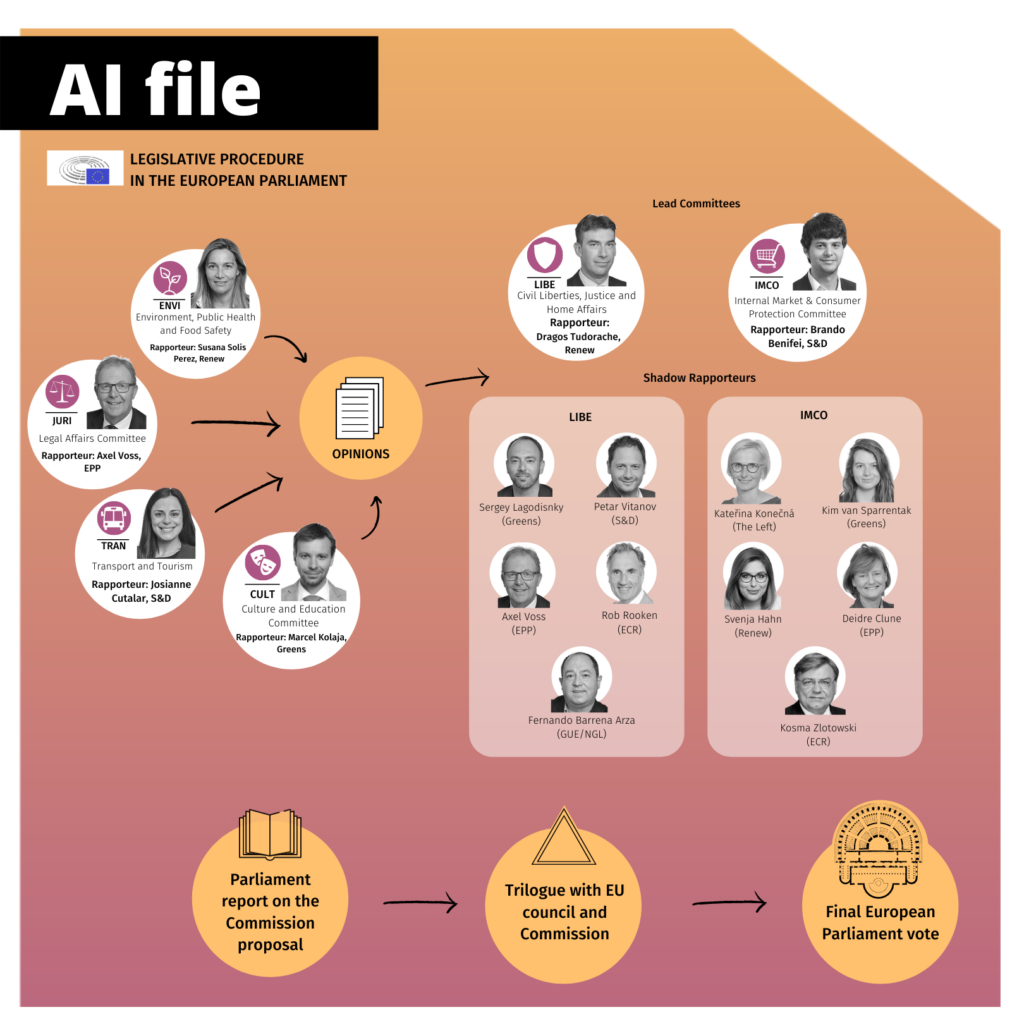

EU AI Act and Biometric Mass Surveillance

In April 2021, the European Commission proposed a law to regulate the use of Artificial Intelligence (AI Act). Since becoming part of the ‘Reclaim Your Face’ coalition, FSE has joined a growing number of organisations which are calling for the act to include a ban on biometric mass surveillance.

Currently, the European Parliament is forming its opinion on the AI Act proposal. In the past, it has supported the demand for a ban, but more pressure is needed. That is why we must raise awareness among politicians about the impact of biometric mass surveillance on fans’ rights and dignity.

What can fans do?

- Research the use of mass surveillance in football and share the findings with other fans. Write to EDRi’s campaigns and outreach officer Belen (belen.luna[at]edri.org) or email info[at]fanseurope.org if your club or local stadium operator deploys facial recognition cameras or other forms of mass surveillance.

- Raise awareness among fans, community organisers, and local politicians as to the prevalence and impact of mass surveillance.

- Organise locally and through national and pan-European representative bodies to contest the use of mass surveillance in football.

- If you are part of an organisation, join EDRi’s ‘Reclaim Your Face’ coalition.

Further reading

- Burgess, Matt. ‘The Met Police’s Facial Recognition Tests Are Fatally Flawed’, Wired, 4th July 2019 (accessed online on 10th August 2022)

- European Digital Rights (EDRi) & Edinburgh International Justice Initiative (EIJI) (2021). ‘The rise and rise of biometric mass surveillance in the EU’. (accessed online on 10th August 2022)

- Football Supporters Europe (2022). ‘Facial Recognition Technology: Fans, Not Test Subjects’. (accessed online on 10th August 2022)

- Football Supporters Europe (2022). ‘FSE Calls On EU Parliament To Protect Citizens From Biometric Mass Surveillance’. (accessed online on 10th August 2022)