Borders, migrants and failed humanitarianism

Some of the most chilling biometric systems we have seen are those deployed against people on the move.

Whether it’s for migration, tourism, to see their family, for work, or to seek asylum, people on the move rely on border guards, police and governments to grant them permission to enter.

It should be obvious that they have a right to be treated humanely and with dignity while doing so. However, we know that non-EU nationals travelling into the EU are frequently used as a testing ground for biometric technologies.

Governments and tech companies exploit their power to treat people on the move as lab rats for the development of invasive technologies under the guise of “innovation” or “security”. Some of these technologies are later deployed as part of general monitoring systems.

Experimenting on people on the move

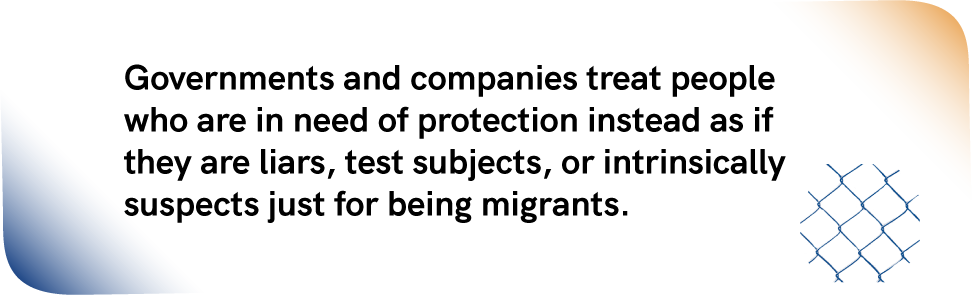

The authorities and companies deploying these technologies exploit the secrecy and discretion that surround immigration decisions. As a result, they experiment with technologies that are worryingly pseudo-scientific, some of which rely on discredited and harmful theories such as phrenology.

For instance, real examples from Europe include:

1. The analysis of people’s “micro-expressions” to supposedly detect whether they are lying.

2. Testing of people’s voices and bones to interrogate their asylum claims.

Experimenting on people in need of humanitarian aid

Patterns similar to those in border administration are increasingly seen in the humanitarian aid context. Aid agencies and organisations sometimes see biometric identification systems as a silver bullet for managing complex humanitarian programmes, without properly assessing the risks and the rights implications. This is often driven by the unrealistic promises made by biometric tech companies.

In the context of humanitarian action, people who are relying on aid are rarely in a position to refuse to provide their biometric data, meaning that any ‘consent’ given cannot be considered legitimate.

Their acceptance therefore cannot form a legal basis for these experiments, because circumstances force them to accept. Treating people who are not in this position in the same way would never be legal. In humanitarian aid, people’s vulnerability is exploited.

There are also major concerns about how such data are stored, and many questions remain about how they may be used in ways that are deeply harmful to the very people these programmes claim to help. On top of this, by creating enormous databases of sensitive data, such practices are highly likely to lead to mass surveillance and other rights violations.

Why should we fight back?

Not only are these unreliable tests often unnecessarily invasive and undignified, they also treat people in need of protection as liars, as test subjects, or as intrinsically suspicious simply for being migrants.

Furthermore, the EU and its Member States fund this heavily. Much of the money for these projects comes from EU agencies or Member States, for example through the EU’s Horizon 2020 programme. In the programme’s public database, you can read about how EU money is funding biometric experiments that would not be out of place in a science fiction film.

This is happening in tandem with a rise in funding for the EU’s Frontex border agency, which has been accused of violently militarising European borders and persecuting people on the move.

Examples

In Italy, the police have used biometric mass surveillance against people at the country’s borders and have attempted to roll out a real-time system that could potentially monitor the whole population, with the aim of targeting migrants and asylum seekers: https://reclaimyourface.eu/chilling-use-of-face-recognition-at-italian-borders-shows-why-we-must-ban-biometric-mass-surveillance/

In the Netherlands, the government has created a huge pseudo-criminal database of personal data that can be used to perform facial recognition on people included in it solely because they are foreign: https://edri.org/wp-content/uploads/2021/07/EDRI_RISE_REPORT.pdf [p. 67]

In Greece, the European Commission funded a notorious project called iBorderCTRL, which used artificial intelligence and emotion recognition to predict whether people in immigration interviews were lying. The project has been widely criticised for having no scientific basis and for exploiting people’s migration status to try out untested technologies: https://iborderctrl.no/