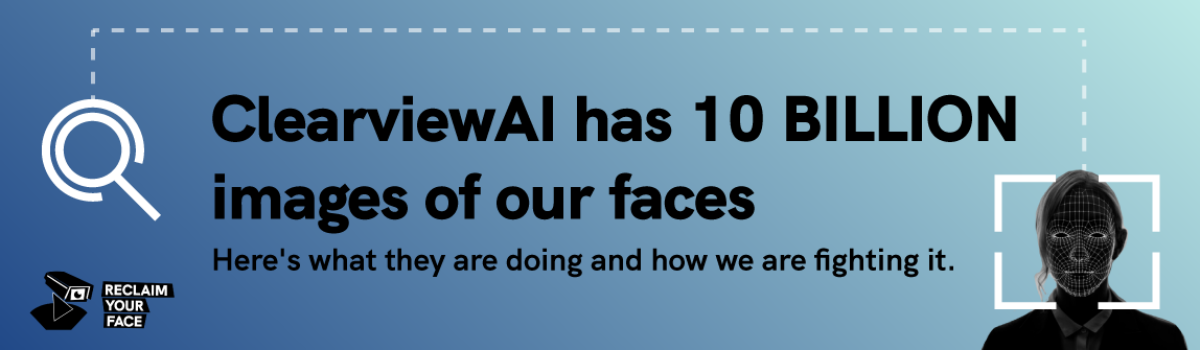

About Clearview AI’s mockery of human rights, those fighting it, and the need for the EU to intervene

Clearview AI describes itself as ‘The World’s Largest Facial Network’. However, a quick search online reveals that the company has been involved in several scandals, making the front page of many publications for all the wrong reasons. In fact, since The New York Times broke the story about Clearview AI in 2020, the company has been constantly criticised by activists, politicians, and data protection authorities around the world. Below is a summary of the many actions taken against the company that hoarded 10 billion images of our faces.

How did Clearview AI build a database of 10 billion images?

Clearview AI gathers data automatically, through a process called online scraping, including scraping of social media. The specific way Clearview AI gathers its data enables biometric mass surveillance, a practice also adopted by other actors such as PimEyes.

The company scrapes the pictures of our faces from the entire internet – including social media applications – and stores them on its servers. Following the gathering and storage of data through online scraping, Clearview AI managed to create a database of 10 BILLION images. Now, the company uses an algorithm that matches a given face to all the faces in its 10B database: (virtually) everyone and anyone.

Creepily enough, this database can be made available to any company, law enforcement agency or government that can pay for access.

This will go on, as long as we don’t put a stop to Clearview AI and its peers. Reclaim Your Face partners and other organisations have taken several actions to limit Clearview AI in France, Italy, Germany, Belgium, Sweden, the United Kingdom, Australia and Canada.

In several EU countries many activists, Data Protection Authorities and watchdogs took action.

In May 2021, a coalition of organisations (including noyb, Privacy International (PI), Hermes Center and Homo Digitalis) filed a series of submissions against Clearview AI, Inc. The complaints were submitted to data protection regulators in France, Austria, Italy, Greece and the United Kingdom.

Here are some of the Data Protection Authorities and watchdogs’ decisions:

France

Following complaints about Clearview AI’s facial recognition software from Reclaim Your Face partner Privacy International and from individuals, the French data protection authority (‘CNIL’) decided in December 2021 that Clearview AI should cease collecting and using data from data subjects in France.

Italy

After individuals (including Riccardo Coluccini) and Reclaim Your Face organisations (among them Hermes Centre for Transparency and Digital Human Rights and Privacy Network) filed a complaint against Clearview AI, Italy’s data privacy watchdog (Garante per la Protezione dei dati personali) fined Clearview AI the highest amount possible: 20 million Euros. The decision includes an order to erase the data relating to individuals in Italy and bans any further collection and processing of the data through the company’s facial recognition system.

Germany

Following an individual complaint from Reclaim Your Face activist Matthias Marx, the Hamburg Data Protection Agency ordered Clearview to delete the mathematical hash representing a user’s profile. As a result, the Hamburg DPA deemed Clearview AI’s biometric photo database illegal in the EU. However, Clearview AI has only deleted Matthias Marx’s data and the DPA’s case is not yet closed.

Belgium

Although the use of this software has never been legal in Belgium, and after initially denying its deployment, the Ministry of the Interior confirmed in October 2021 that the Belgian Federal Police had used the services of Clearview AI. The use stemmed from a trial period the company provided to the Europol Task Force on Victim Identification. While admitting the use, the Ministry of the Interior also emphasised that Belgian law does not allow it. This was later confirmed by the Belgian police watchdog, which ruled that the use was unlawful.

Sweden

In February 2021, the Swedish data protection authority (IMY) decided that a Swedish local police authority’s use of Clearview’s technology involved the unlawful processing of biometric data for facial recognition. The DPA also pointed out that the police had failed to conduct a data protection impact assessment. As such, the authority fined the local police authority €250,000 and ordered it to inform the people whose personal data had been sent to the company.

Clearview AI is in trouble outside of the European Union too

United Kingdom

On 27 May 2021, Privacy International (PI) filed complaints against Clearview AI with the UK’s independent regulator for data protection and information rights law, the Information Commissioner’s Office (ICO). The ICO and the OAIC, which regulates the Australian Privacy Act, had been conducting a joint investigation into Clearview AI since 2020. Last year, the ICO announced its provisional intent to impose a fine of over £17 million based on Clearview’s failure to comply with UK data protection laws.

In May 2022, the ICO issued Clearview AI Inc with a fine of £7,552,800 and an enforcement notice, ordering the company to stop obtaining and using the personal data of UK residents that is publicly available on the internet, and to delete the data of UK residents from its systems.

Australia

For its part, the OAIC reached a decision and ordered the company to stop collecting facial biometrics and biometric templates from people in Australia, and to destroy all existing images and templates that it holds.

Canada

Canadian authorities were unequivocal in ruling that Clearview AI’s practices violated their citizens’ right to privacy and, furthermore, that they constitute mass surveillance. Their statement highlights the clear link between Clearview AI and biometric mass surveillance, which treats all citizens as suspects of crime.

War and Clearview AI

In an already distressing war context, Ukraine’s defence ministry is reportedly using Clearview AI’s facial recognition technology to vet people at checkpoints, unmask Russian assailants, combat misinformation and identify the dead.

It is deeply worrying that Clearview AI’s technologies are reportedly being used in warfare. What happens when Clearview AI decides to offer its services to military forces with whom we disagree? What does this say about the geopolitical power that we, as a society, allow private surveillance actors to hold? By allowing Clearview AI into military operations, we are opening a Pandora’s box: technologies that have been ruled incompatible with people’s rights and freedoms are being deployed in literal life-or-death situations. Clearview AI’s systems show documented racial bias and have facilitated several traumatic wrongful arrests of innocent people around the world. Even a system that is highly accurate in lab conditions will not perform as accurately in a war zone. This can lead to fatal results.

Clearview AI mocks national data protection authorities. We must act united! The EU must step up and use the AI Act to end the mocking of people’s rights and dignity.

Pressure is mounting, but Clearview AI is not stepping down. Instead, the company, which started as a service for law enforcement only, is now telling investors that it is expanding into other uses, including the monitoring of gig workers.

All the data protection authorities mentioned above have strong arguments to justify their decisions. Yet Clearview AI’s CEO responds with statements that mock the fines national authorities issue.

National agencies and watchdogs cannot rein in Clearview AI alone. The EU must step in and ensure Clearview AI does not also mock the fundamental nature of human rights.

Reclaim Your Face campaigns to stop Clearview AI and any other uses of technology that create biometric mass surveillance.

Further reading:

- CNIL decision France: https://www.cnil.fr/sites/default/files/atoms/files/decision_ndeg_med_2021-134.pdf

- SA decision Italy: https://www.gpdp.it/web/guest/home/docweb/-/docweb-display/docweb/9751362

- Statement by Canadian privacy watchdog: https://www.priv.gc.ca/en/opc-news/news-and-announcements/2021/nr-c_210203/?=february-2-2021

- Australian privacy regulator decision: https://www.oaic.gov.au/__data/assets/pdf_file/0016/11284/Commissioner-initiated-investigation-into-Clearview-AI,-Inc.-Privacy-2021-AICmr-54-14-October-2021.pdf

- IMY decision (in Swedish only): https://www.imy.se/globalassets/dokument/beslut/beslut-tillsyn-polismyndigheten-cvai.pdf

- Hamburg’s DPA decision (in German only): https://noyb.eu/sites/default/files/2021-01/545_2020_Anh%C3%B6rung_CVAI_ENG_Redacted.PDF